#Computer Vision

Deep Joint Unrolling for Deblurring and Low-Light Image Enhancement (JUDE)

WACV 2025

#Robotics #ML Applications

Enhancing OCR-based Indoor Place Recognition with Visitor Map Image by Mitigating Noise from Distracting Words

IROS 2024

#Reinforcement Learning #ML Applications

Sample-efficient inverse design of freeform nanophotonic devices with physics-informed reinforcement learning

Nanophotonics 2024

#Computer Vision

In-Season Wall-to-Wall Crop-Type Mapping Using Ensemble of Image Segmentation Models

IEEE Transactions on Geoscience and Remote Sensing (Volume 61)

2023.12.01

#Computer Vision

RCV2023 Challenges: Benchmarking Model Training and Inference for Resource-Constrained Deep Learning

ICCV 2023 Workshop

2023.10.02

#ML Applications

Free-form optimization of nanophotonic devices: from classical methods to deep learning

Nanophotonics 2022

2022.01.12

#Reinforcement Learning #ML Applications

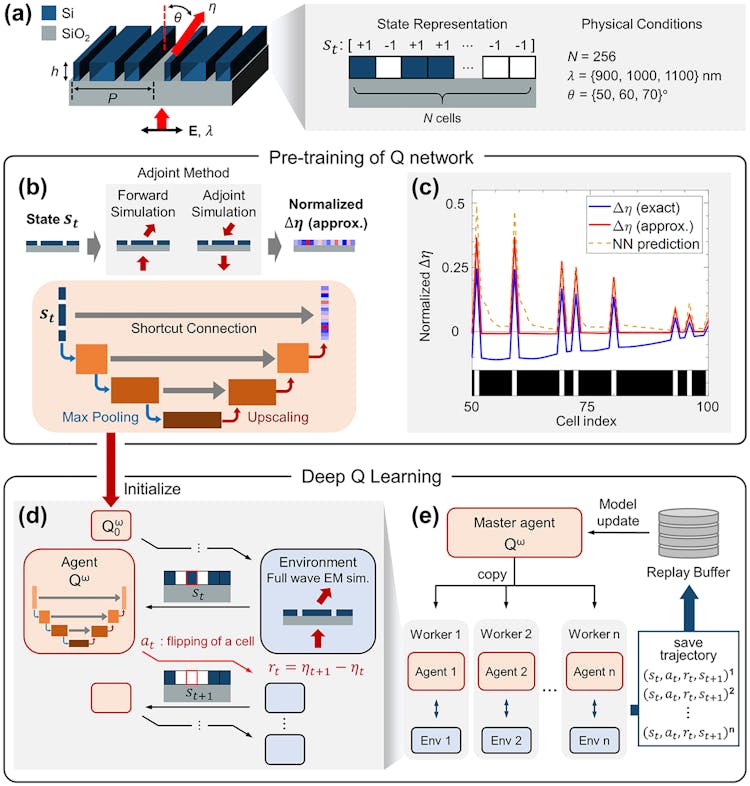

Structural optimization of a one-dimensional freeform metagrating deflector via deep reinforcement learning

ACS Photonics 2022

#ML Applications

Inverse design of organic light-emitting diode structure based on deep neural networks

Nanophotonics 2021

2021.11.04

#Reinforcement Learning #Publications

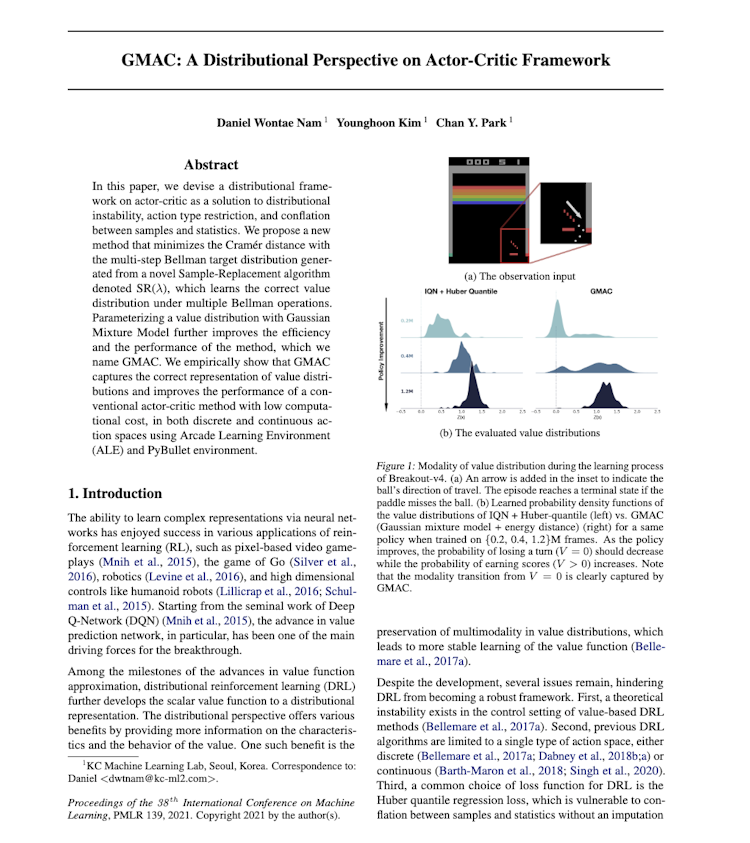

GMAC: A Distributional Perspective on Actor-Critic Framework

ICML 2021

#Computer Vision

Investigating Pixel Robustness using Input Gradients

2019.08.30

#Reinforcement Learning

Distilling Curiosity for Exploration

2019.07.29